WP4-39


Latest revision as of 08:45, 10 March 2023

Simulated data aggregator supporting intelligent decision in computer vision components

| ID | WP4-39 |

| Contributor | HI-IBERIA |

| Levels | Functional |

| Require | AirSim built on Unreal Engine |

| Provide | Simulator-based data aggregator built over AirSim generating high amounts of training data (RGB images) to support any computer vision component for intelligent decision in drones. |

| Input | Simulation scenario parameters; drone configuration parameters in JSON format |

| Output | Simulated RGB images (or point-cloud as a flat array of floats) |

| C4D building block | (Simulated) Data Acquisition |

| TRL | 6 |

| Contact | dfuentes at hi-iberia.es |

Detailed Description

Computer vision is a significant driver in the new era of drone applications, but developing and testing computer vision components for drones in the real world is expensive and time-consuming. The problem is exacerbated by the fact that drones are often unsafe and expensive to operate during the training phase. A further key challenge is the high sample complexity of these techniques: learning useful behaviours requires a large amount of annotated training data, collected in a variety of conditions and environments, in order to exploit recent advances in machine intelligence and deep learning. Meeting this need involves not only developing the proposed ML algorithms but also dedicating considerable time to building an infrastructure able to generate training models in a variety of environments. Consequently, the design, deployment, and evaluation of computer vision components for drones becomes a complex and costly endeavour for researchers and engineers, since it requires exploring multiple conditions and parameters through repeatable and controllable experiments.

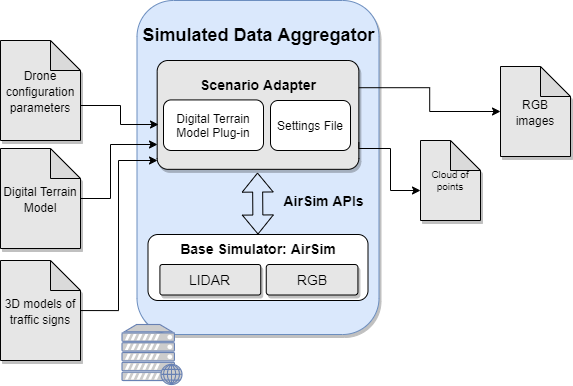

In the context of C4D, the proposed component is a simulator-based data aggregator built over AirSim that generates large amounts of training data to support any computer vision component for intelligent decision in drones. This component speeds up the construction process of a civil infrastructure while saving costs, by reducing the need to perform multiple data collection campaigns to obtain real training data. Additionally, the component can help assess which cameras/sensors (RGB, LIDAR, ...) should be integrated in the real drone, starting from the simulated point clouds it generates. This makes it possible to determine which kind of camera would provide the most suitable results for the analysis before launching the real drone flight.

In the context of UC2 – Construction, the specific role of the simulated data aggregator is to generate a vast amount of annotated training data for training the convolutional neural networks that will be implemented in the Computer Vision Component for Drones, which is being developed within task 3.3. In particular, the simulated data aggregator generates a vast amount of RGB images (or a point cloud as a flat array of floats, along with the timestamp of the capture and the position) intended to represent the real scenario. These are produced from scenario-tailored input data, that is, the specific drone configuration parameters, the digital terrain model and the 3D models (CAD files) of the objects to detect, which are traffic signs in the particular case of UC2 - Demo 1. The RGB images serve to train the convolutional neural networks, and hence the DL algorithms of the Computer Vision Component for Drones, at an early stage.

Considering the C4D Reference Architecture context, the simulator-based data aggregator corresponds to the Data acquisition building block in the Payload management block. In particular, this component contributes to the reference architecture by simulating the behaviour of the Data acquisition building block: it generates simulated payload data that serves as training payload data for the Data Analytics building block in the Data Management block.

Additionally, the simulator-based data aggregator contributes to UC2-D1-FUN-09 (Specialized software for georeferenced point-cloud creation) as well as to the following requirements of UC2-DEM1:

- UC2-DEM1-FUN-01 - The drone system shall capture a high-density point cloud.

- UC2-DEM1-FUN-02 - The drone system shall capture RGB data of the surface.

- UC2-DEM1-FUN-03 - The point cloud shall be in an open format such as LAS.

Therefore, the component also addresses the following KPIs for UC2-DEM1:

- UC2-D1-KPI-T1 - Recognition of work elements through AI: Detection of the position of the main work elements in the road through the point cloud.

- UC2-D1-KPI-T2 - Recognition of work elements through AI: Detection of the total number of elements.

Technical specification

From a technical point of view, the simulated data aggregator has been implemented over already existing tools: Unreal Engine and AirSim. Unreal Engine is a complete suite of creation tools for game development, architectural and automotive visualization, linear film and television content creation, broadcast and live event production, training and simulation, and other real-time applications. AirSim is a simulator for drones, cars and more, built on Unreal Engine. It is open-source, cross platform, and supports software-in-the-loop simulation with popular flight controllers. It is developed as an Unreal plugin that can simply be dropped into any Unreal environment. The recommended hardware requirements specified for the simulation tools are:

- Unreal Engine:

  - Operating System: Windows 10 64-bit

  - Processor: Quad-core Intel or AMD, 2.5 GHz or faster

  - Memory: 8 GB RAM

  - Graphics Card: DirectX 11 or 12 compatible graphics card

  - RHI Version:

    - DirectX 11: Latest drivers

    - DirectX 12: Latest drivers

    - Vulkan: AMD (21.11.3+) and NVIDIA (496.76+)

- AirSim Simulator:

  - Operating System: Windows 10 64-bit

  - CPU: Intel Core i7

  - GPU: Nvidia GTX 1080

  - RAM: 32 GB

However, for the implementation of the Unreal+AirSim simulator server in the Comp4Drones domain, the software and hardware specifications used are the following:

- Software:

  - Windows 10

  - Python 3.7.9

  - Unreal Engine version 4.25

  - AirSim 1.3.0

- Hardware:

  - Operating System: Windows 10 64-bit

  - CPU: Intel Core i5

  - GPU: Nvidia GTX 980

  - RAM: 32 GB

Regarding the interfaces, there are two: the scenery creator, based on Unreal Engine, and AirSim for the simulation itself. Each interface requires specific inputs and provides specific outputs, as described below:

- Scenery creator interface (Unreal Engine):

  - Input: 3D CAD models of the traffic signs.

  - Output: Different scenarios of a road construction site.

- AirSim Simulator:

  - Input:

    - Compiled road construction scenery (digital terrain model).

    - AirSim settings file, which specifies the type of vehicle (in this case, a drone), the camera settings (resolution, angle in degrees), and the LIDAR sensor settings (points per second of the point cloud and number of channels).

    - Drone flight script: Python file that describes the movement of the drone, its speed, and the number of images taken per second.

  - Output: RGB images of the simulated drone flight, or an array of LIDAR point-cloud values in float32 (when a LIDAR sensor is used).
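As an illustration of the settings file described above, the following minimal Python sketch builds an AirSim-style settings dictionary and serialises it to JSON. The key names follow the publicly documented AirSim `settings.json` format, but all values (resolution, field of view, geo-point, number of channels, points per second) and the vehicle and sensor names `Drone1` and `Lidar1` are placeholders chosen for illustration, not the values used in the project.

```python
import json


def build_settings(start_datetime, use_lidar=False,
                   width=1920, height=1080, fov_degrees=90):
    """Return an AirSim-style settings dictionary for a single drone.

    All numeric values are illustrative placeholders.
    """
    sensors = {}
    if use_lidar:
        sensors["Lidar1"] = {          # hypothetical sensor name
            "SensorType": 6,           # 6 identifies a lidar sensor in AirSim
            "Enabled": True,
            "NumberOfChannels": 16,    # placeholder
            "PointsPerSecond": 100000  # placeholder
        }
    return {
        "SettingsVersion": 1.2,
        "SimMode": "Multirotor",       # the vehicle type is a drone
        "ViewMode": "FlyWithMe",
        # Time of day drives the sun position and therefore the shadows.
        "TimeOfDay": {"Enabled": True, "StartDateTime": start_datetime},
        # Placeholder origin geo-point.
        "OriginGeopoint": {"Latitude": 0.0, "Longitude": 0.0, "Altitude": 0.0},
        "CameraDefaults": {
            "CaptureSettings": [{
                "ImageType": 0,        # 0 is the RGB scene image
                "Width": width,
                "Height": height,
                "FOV_Degrees": fov_degrees,
            }]
        },
        "Vehicles": {
            "Drone1": {"VehicleType": "SimpleFlight", "Sensors": sensors}
        },
    }


def to_json(settings):
    """Serialise the settings dictionary as it would be written to settings.json."""
    return json.dumps(settings, indent=2)
```

Calling `build_settings` with several start times, for example `"2023-03-10 08:00:00"`, `"2023-03-10 12:00:00"` and `"2023-03-10 18:00:00"`, would yield one settings file per luminosity condition.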

Considering the inputs required as well as the outputs obtained, the data flow of the Simulated Data Aggregator component is as follows:

- Inputs required are: drone configuration parameters, digital terrain model and 3D models of traffic signs (objects to be detected).

- Creation of a settings file to configure the simulation parameters: simulation mode, view mode, time of day (to simulate the sun position), origin geo-point, camera settings (selection of LIDAR or RGB), and vehicle settings, among others. The vehicle settings parameter is the one that includes the drone configuration parameters taken as input.

- The digital terrain model is compiled through an Unreal plug-in provided by AirSim.

- The settings file, together with the digital terrain model and the 3D models of the traffic signs to be represented, is sent to the AirSim simulator through the AirSim APIs.

- The scenario is simulated with the traffic signs placed on the terrain provided, and it is recorded from a drone (with an RGB camera or a LIDAR sensor) according to the parameters indicated in the settings.

- The simulation output is retrieved from the base simulator by the Scenario Adaptor, which provides either a point cloud as a flat array of floats, along with the timestamp of the capture and the position, or RGB images (depending on the component needs), to be used as input for the CNN in the computer vision system developed under WP3.
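To make the point-cloud output format concrete, the sketch below shows one way a consumer could turn the flat array of floats into a list of 3D points. It assumes the values are interleaved as x, y, z triples, which is how AirSim documents its lidar output. The `LidarCapture` container and the `unflatten_points` helper are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class LidarCapture:
    """One capture: timestamp, sensor position and the decoded points."""
    timestamp: int
    position: Point
    points: List[Point]


def unflatten_points(flat: Sequence[float]) -> List[Point]:
    """Group a flat [x0, y0, z0, x1, y1, z1, ...] array into (x, y, z) tuples."""
    if len(flat) % 3 != 0:
        raise ValueError("flat point cloud length must be a multiple of 3")
    return [(flat[i], flat[i + 1], flat[i + 2]) for i in range(0, len(flat), 3)]


def make_capture(timestamp: int, position: Point,
                 flat: Sequence[float]) -> LidarCapture:
    """Bundle the decoded points with the capture metadata."""
    return LidarCapture(timestamp, position, unflatten_points(flat))
```

Keeping the timestamp and position next to the points lets a later georeferencing or labelling step work on each capture independently.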

The development process of the simulation environments has consisted of the following phases:

- First of all, a study phase of the real environments was carried out. In this phase, the images of real drone flights captured during the flight campaigns performed in Jaén (Spain) were analysed. The study also covers the logic of the sign positions, which was evaluated through the analysis of real road works in order to enlarge the variety of real images.

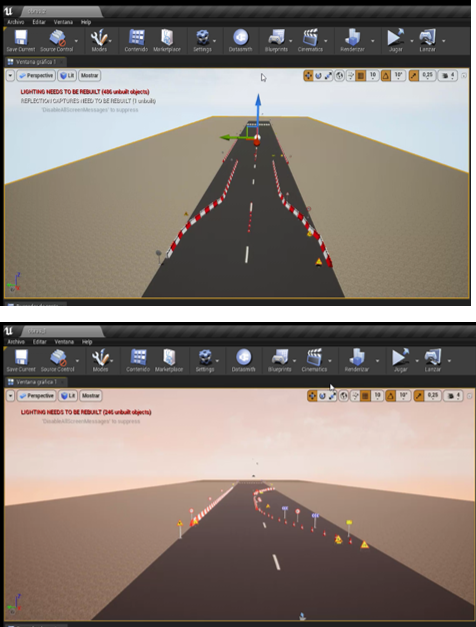

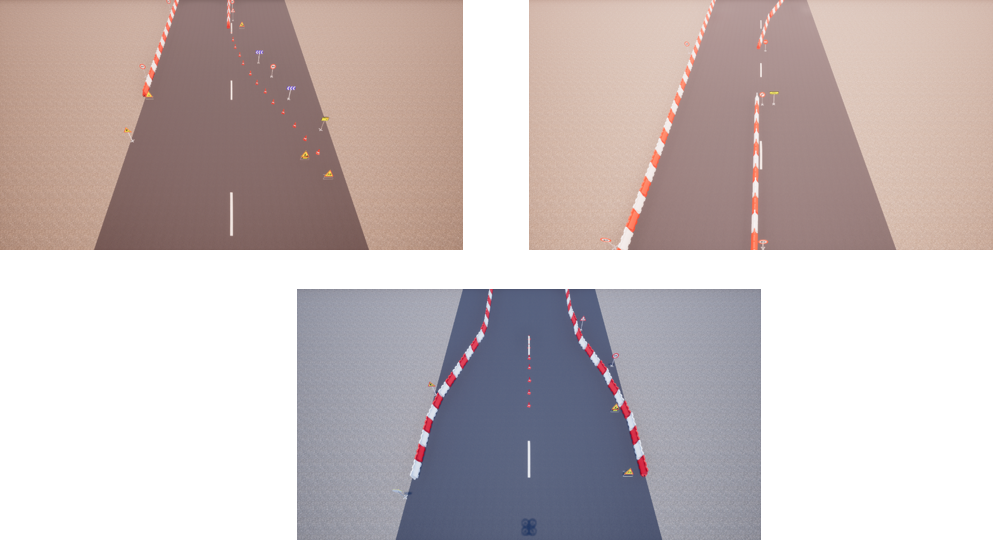

- Once the study was completed, simulated environments of different realistic construction site scenarios were created manually. Thanks to these environments, it has been possible to perform different flights in a short period of time, without the need to carry out and deploy real drone flights at real pilot sites. The simulated flights were performed with different degrees of luminosity, so that variations of the same scenarios at different times of the day were obtained. Configuring the simulations with different luminosity levels has been very relevant, since shadows can alter the recognition results and produce false positives. With real flights, this would have required many identical flights at different times of the day, which is time-consuming and has a high economic cost. Some examples of simulated environments are:

- Once these example environments had been compiled and executed on the server, the next step was to use a Python client with the AirSim library, running the flight script mentioned above, in order to create a dataset. This script is essential in the development process, as it automatically generates the images that expand the dataset. The following figures show some examples:

- Finally, the last step of the development process is to label the generated images to retrain the existing model.
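The flight script used in the project is not reproduced on this page. The following is only a minimal sketch of what such a script could look like, assuming the standard AirSim Python client (`airsim` package) and a camera named `"0"`. The back-and-forth survey pattern and the function names `survey_waypoints` and `fly_and_capture` are hypothetical, and for simplicity the sketch captures one image per waypoint rather than a fixed number of images per second.

```python
import os
from typing import List, Tuple

Waypoint = Tuple[float, float, float]


def survey_waypoints(length_m: float, width_m: float,
                     spacing_m: float, altitude_m: float) -> List[Waypoint]:
    """Back-and-forth waypoints over a rectangle, in NED coordinates."""
    waypoints = []
    rows = int(width_m // spacing_m) + 1
    for row in range(rows):
        y = row * spacing_m
        # Alternate the direction of travel on every row.
        xs = (0.0, length_m) if row % 2 == 0 else (length_m, 0.0)
        for x in xs:
            waypoints.append((x, y, -altitude_m))  # NED: negative z is up
    return waypoints


def fly_and_capture(waypoints: List[Waypoint], speed_m_s: float,
                    out_dir: str) -> None:
    """Fly through the waypoints and save one RGB image at each of them."""
    import airsim  # imported here so the planner above works without AirSim

    client = airsim.MultirotorClient()
    client.confirmConnection()
    client.enableApiControl(True)
    client.armDisarm(True)
    client.takeoffAsync().join()
    os.makedirs(out_dir, exist_ok=True)
    for index, (x, y, z) in enumerate(waypoints):
        client.moveToPositionAsync(x, y, z, speed_m_s).join()
        # Request a compressed (PNG) scene image from camera "0".
        responses = client.simGetImages(
            [airsim.ImageRequest("0", airsim.ImageType.Scene)])
        path = os.path.join(out_dir, "frame_%05d.png" % index)
        airsim.write_file(path, responses[0].image_data_uint8)
    client.landAsync().join()
    client.armDisarm(False)
    client.enableApiControl(False)
```

Separating the waypoint planning from the capture loop means the flight pattern can be checked without a running simulator.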

Application and Improvements

In the scope of UC2 (and specifically in Demo 1), the simulated data aggregator has helped to speed up the data collection phase, which was hindered by several issues: the COVID-19 pandemic delayed the initial planning of the drone flights, and the withdrawal of the drone and scenario provider partners from UC2 allowed only one data collection campaign to be performed. The component makes it possible to generate a large amount of data from scenario-tailored simulated drone flights, which has supported the training of the computer vision system without the need to perform multiple data collection campaigns. As a consequence, the data aggregator simulator has delivered cost savings and made it possible to continue with the initial plans and developments envisaged for the project.

Beyond the scope of UC2, the data aggregator simulator is motivated by the high cost of performing a drone flight campaign to train a computer vision system, and by the time needed to collect a large amount of annotated training data in a variety of real conditions and environments in order to cover a larger number of scenarios. Consequently, the data aggregator simulator can be tailored to any domain in which AI systems need a large amount of raw data to train their algorithms.

Considering the work carried out for the development and deployment of the simulated data aggregator, one of the most relevant tasks in the Artificial Intelligence field is the collection and labelling of the data that will be used to train the model. In this case, the labelled traffic sign datasets available for training AI models for detection and classification are composed of images with a frontal perspective, as shown in Figure 6. However, the perspectives of the drone images will range from zenithal (top-down) to high-angle, depending on the angle at which the camera is positioned. This configuration parameter changes the image perspective and can affect how the AI model detects and classifies objects in the image.

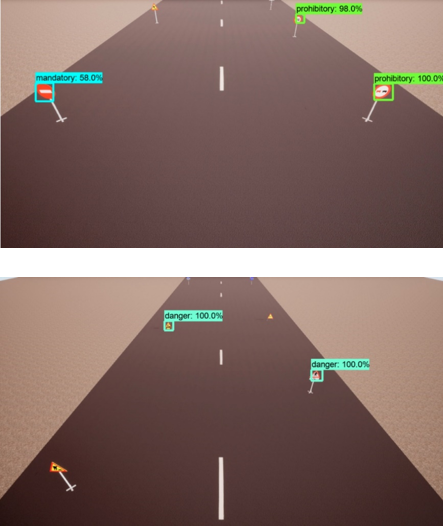

Therefore, it is necessary to train the models with sets of frontal perspective images and to test them with images in a top-down perspective to check whether they perform the detections and classifications correctly. Here the simulator provides test environments in which to learn how the model behaves on this type of image, and allows changes such as the altitude of the drone or the pitch angle of the drone's camera in order to adapt the model as closely as possible to the real world. Figure 7 and Figure 8 show some examples of this test.
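As a rough aid for choosing the altitude in such tests, the ground area covered by a camera pointing straight down can be estimated from the altitude and the field of view. The sketch below assumes flat ground, an ideal pinhole camera and a horizontal field of view. The function names are hypothetical and the numbers in the usage note are examples only.

```python
import math


def nadir_footprint_width(altitude_m: float, fov_degrees: float) -> float:
    """Ground width covered by a downward-looking camera: 2 * h * tan(FOV / 2)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_degrees) / 2.0)


def ground_sample_distance(altitude_m: float, fov_degrees: float,
                           image_width_px: int) -> float:
    """Approximate ground size of one pixel, in metres per pixel."""
    return nadir_footprint_width(altitude_m, fov_degrees) / image_width_px
```

For instance, at an altitude of 10 m with a 90 degree field of view the footprint is about 20 m wide, so a 1920-pixel-wide image resolves roughly 1 cm per pixel. Doubling the altitude doubles both figures, which gives a first indication of how small a traffic sign will appear in the image.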

The use of simulated environments thus makes it very simple to include variations in the different simulated scenarios, which translates into the following advantages and expected improvements over imaging with drone flights in real environments:

- Adaptation to different environments

An essential aspect when generating a dataset is the variability in the data. To achieve this in an image dataset, it is necessary to vary elements such as the arrangement of objects (Figure 3, Figure 4 and Figure 5), luminosity, opacities, and meteorological effects such as rain or snow. In a simulated environment, it is sufficient to change the simulator configuration, change the layout, or create new elements to obtain the desired conditions. In a real environment, however, several flights have to be performed depending on the weather conditions, and the layout has to be changed manually by moving objects around again and again, which implies high costs in money and time.

- Cost and Time savings

The ease of making changes in the simulated environments translates directly into economic and time savings, since the physical resources required to create the environment are reduced: the personnel necessary for recording flights, drone rental, personnel specialized in flying these drones, etc. It also significantly reduces the cost related to labelling, as described in the following point.

- Automation of labelling

Automating a laborious task such as labelling significantly reduces the time required to obtain a sufficient data set for training and removes the cost of the personnel needed to carry out this task. In the specific case of the AirSim simulator used for this project, it is possible to obtain segmented images of the environment, such as the one represented in Figure 9, which allow each object to be labelled.

However, since the user is not yet able to control the assignment of RGB colour codes to the objects in the environment, this process is still at a "semi-automated" stage. According to the documentation of the simulator itself, work is in progress to fully automate this task. Once labelling automation is achieved, the generation of new datasets would be accelerated and both the time and monetary cost would be significantly reduced by avoiding human-generated labelling.
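To illustrate how a segmented image can support labelling, the sketch below derives one bounding box per colour from a segmentation image. It is not the project's labelling tool. It assumes the image is available as rows of RGB tuples and that each object has its own colour. Mapping a colour to a traffic sign class would remain a manual step, in line with the "semi-automated" stage described above. The function name `bounding_boxes` is hypothetical.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive


def bounding_boxes(segmentation: List[List[RGB]]) -> Dict[RGB, Box]:
    """Return the tight bounding box of every colour in a segmentation image."""
    boxes: Dict[RGB, Box] = {}
    for y, row in enumerate(segmentation):
        for x, colour in enumerate(row):
            if colour in boxes:
                x_min, y_min, x_max, y_max = boxes[colour]
                boxes[colour] = (min(x_min, x), min(y_min, y),
                                 max(x_max, x), max(y_max, y))
            else:
                # First pixel of this colour: the box is that single pixel.
                boxes[colour] = (x, y, x, y)
    return boxes
```

The resulting boxes can be paired with the RGB image captured at the same instant to produce detection annotations once each colour has been assigned to a class.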

- Transfer Learning

The adaptation of pre-trained base architectures with data sets very close to the target data is essential to achieve a model fully prepared for the task at hand. For this, thousands of labelled images of the same type and composition as the target images are required, a task in which the simulator is of great help in order to generate these images quickly and economically, as well as to accelerate the labelling process as previously indicated.

- Adaptation to future changes

Once the objective of the AI model has been achieved, it must continue to evolve according to future changes in the real environment, for which it must be retrained with the modifications and variations in the environment. Therefore, in order to represent such changes in the training data sets, the simulator plays a key role.